Providers and Models

This page adapts the original AI SDK documentation: Providers and Models.

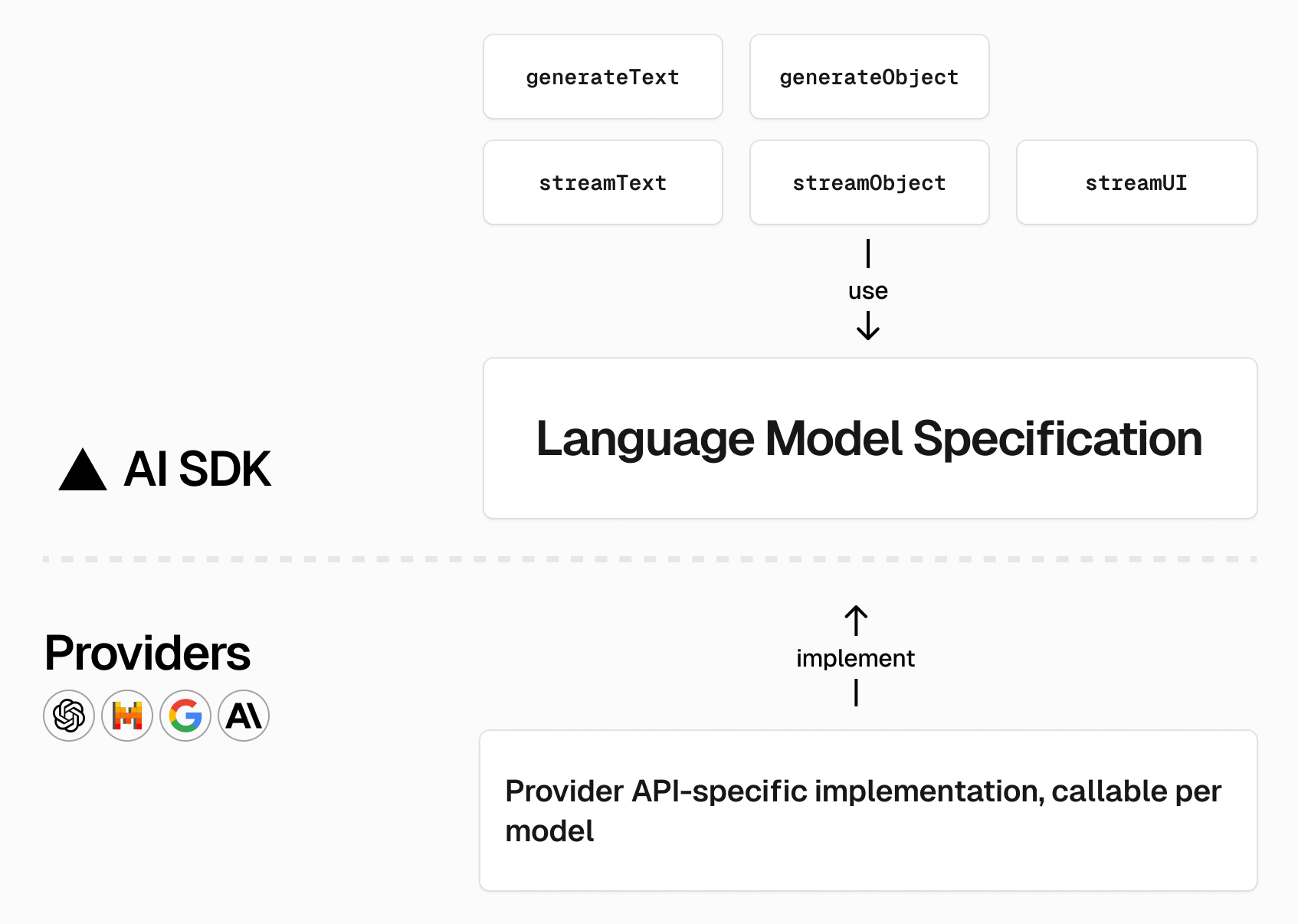

Companies such as OpenAI, Anthropic, Google, and Groq offer access to families of large language models (LLMs) through distinct APIs. Each API has its own authentication scheme, request/response schema, streaming protocol, and feature gates, which makes it hard to switch providers without rewriting application code.

The Swift AI SDK mirrors the AI SDK language-model specification (AISDKProvider). Every provider module implements the same typed surface area, so calls like generateText, generateObject, and the streaming helpers stay identical even when you swap vendors.
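The idea of a shared typed surface can be sketched in a few lines. This is only an illustration under assumed names: `TextModel`, `UppercaseModel`, `ReversingModel`, and `answer(using:prompt:)` are hypothetical stand-ins, not types or functions from the Swift AI SDK, and the "models" do no network work.

```swift
// Hypothetical stand-in for a shared language-model interface.
// None of these names come from the Swift AI SDK.
protocol TextModel {
    var modelId: String { get }
    func complete(_ prompt: String) -> String
}

// Two toy "vendors" that conform to the same protocol.
struct UppercaseModel: TextModel {
    let modelId = "vendor-a/upper"
    func complete(_ prompt: String) -> String { prompt.uppercased() }
}

struct ReversingModel: TextModel {
    let modelId = "vendor-b/reverse"
    func complete(_ prompt: String) -> String { String(prompt.reversed()) }
}

// Application code depends only on the protocol, so swapping vendors
// changes the argument passed in, not the function itself.
func answer<M: TextModel>(using model: M, prompt: String) -> String {
    "[\(model.modelId)] \(model.complete(prompt))"
}
```

Calling `answer(using: UppercaseModel(), prompt: "hi")` and `answer(using: ReversingModel(), prompt: "hi")` exercises the same call site with different back ends, which is the property the provider modules aim to preserve.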

Swift AI SDK provider modules

The Swift port currently ships with 33 provider modules (one SwiftPM product per provider). Each module exports a lazily configured global shortcut plus a factory for advanced configuration, matching the TypeScript behavior. See Providers for the full, up-to-date list.

A few common examples:

- OpenAI Provider: import OpenAIProvider, then call openai("gpt-4o") or createOpenAIProvider(settings:).
- Anthropic Provider: import AnthropicProvider, use anthropic("claude-3-5-sonnet") or createAnthropicProvider(settings:).
- Google Generative AI Provider: import GoogleProvider, use google("gemini-1.5-pro") or createGoogleGenerativeAI(settings:).
- Vercel Provider: import VercelProvider, then call vercel("v0-1.0-md") or createVercelProvider(settings:). (Documentation coming soon)
- TogetherAI Provider: import TogetherAIProvider, then call togetherai("meta-llama/Meta-Llama-3.1-8B-Instruct-Turbo") or createTogetherAIProvider(settings:). (Documentation coming soon)

Each shortcut performs lazy API-key loading (via environment variables such as OPENAI_API_KEY, ANTHROPIC_API_KEY, GOOGLE_API_KEY, GROQ_API_KEY) and returns a ready-to-use LanguageModelV3 instance for the higher-level Swift AI SDK APIs.
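Lazy key loading means the environment is not read until a key is actually needed, and the value is then reused. The following is a minimal sketch of that pattern, not the SDK's implementation: `LazyAPIKey`, `MissingAPIKeyError`, and the injectable `environment` closure are hypothetical names introduced for illustration, and the class is not thread-safe.

```swift
import Foundation

// Hypothetical error type for this sketch only.
struct MissingAPIKeyError: Error, Equatable {
    let variableName: String
}

// Hypothetical helper illustrating lazy key lookup with caching.
final class LazyAPIKey {
    private let variableName: String
    private let environment: () -> [String: String]
    private var cached: String?
    private(set) var lookupCount = 0

    init(
        variableName: String,
        environment: @escaping () -> [String: String] = { ProcessInfo.processInfo.environment }
    ) {
        self.variableName = variableName
        self.environment = environment
    }

    // Reads the environment on first use only; later calls return the cached value.
    func resolve() throws -> String {
        if let cached = cached { return cached }
        lookupCount += 1
        guard let value = environment()[variableName], !value.isEmpty else {
            throw MissingAPIKeyError(variableName: variableName)
        }
        cached = value
        return value
    }
}
```

Constructing `LazyAPIKey(variableName: "OPENAI_API_KEY")` does not touch the environment; a missing variable only surfaces as an error when `resolve()` is first called, which is why a misconfigured key tends to show up at request time rather than at import time.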

Self-hosted and upstream-compatible APIs

If you are integrating with a custom gateway that implements the OpenAI API surface (for example LM Studio), use the OpenAI Compatible provider. Override the base URL, API key, and optional headers in OpenAICompatibleProviderSettings and the rest of your Swift code stays identical.
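At the HTTP level, pointing at an OpenAI-compatible gateway amounts to changing where the request goes and which headers accompany it. The sketch below shows that with plain Foundation types. It does not use the provider module: `GatewaySettings` and `makeChatRequest` are hypothetical names, and the `http://localhost:1234/v1` address in the usage note is an assumed local endpoint that you should replace with your gateway's URL.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking  // URLRequest lives here on Linux
#endif

// Hypothetical settings type for this sketch; not OpenAICompatibleProviderSettings.
struct GatewaySettings {
    var baseURL: URL
    var apiKey: String?
    var headers: [String: String] = [:]
}

// Builds an OpenAI-style chat completion request against the configured gateway.
func makeChatRequest(settings: GatewaySettings, model: String, prompt: String) throws -> URLRequest {
    let url = settings.baseURL
        .appendingPathComponent("chat")
        .appendingPathComponent("completions")
    var request = URLRequest(url: url)
    request.httpMethod = "POST"
    request.setValue("application/json", forHTTPHeaderField: "Content-Type")
    if let apiKey = settings.apiKey {
        request.setValue("Bearer \(apiKey)", forHTTPHeaderField: "Authorization")
    }
    // Optional extra headers, for example for tracing or routing.
    for (name, value) in settings.headers {
        request.setValue(value, forHTTPHeaderField: name)
    }
    let body: [String: Any] = [
        "model": model,
        "messages": [["role": "user", "content": prompt]]
    ]
    request.httpBody = try JSONSerialization.data(withJSONObject: body)
    return request
}
```

With `GatewaySettings(baseURL: URL(string: "http://localhost:1234/v1")!, apiKey: "local-key")`, the resulting request targets `/v1/chat/completions` on the local server. Only the settings value differs between gateways; the request-building code is unchanged.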

The upstream TypeScript AI SDK also maintains a larger catalog of official and community providers. The Swift port already includes many of them, but not every upstream or community provider is available in Swift yet. For the full catalog, see the upstream AI SDK providers documentation.

Model capabilities overview

| Provider | Typical model identifiers | Text | Embeddings | Images | Audio |
|---|---|---|---|---|---|
| OpenAI (openai) | gpt-5, gpt-4.1-mini, gpt-4o-audio-preview | ✅ | ✅ | ✅ | ✅ |
| Anthropic (anthropic) | claude-3-5-sonnet, claude-3-opus | ✅ | ❌ | ❌ | ❌ |
| Google Generative AI (google) | gemini-1.5-pro, gemini-2.0-flash-exp | ✅ | ✅ | ✅ | ❌ |
| Groq (groq) | llama-3.1-8b-instant, mixtral-8x7b-32768 | ✅ | ❌ | ❌ | ✅ (transcription) |
| OpenAI-compatible (createOpenAICompatibleProvider) | Depends on upstream service | ✅ | ✅ | ✅ | ✅ |

Model catalogs evolve quickly. Inspect the individual provider modules (Sources/OpenAIProvider, Sources/AnthropicProvider, Sources/GoogleProvider, Sources/GroqProvider, and Sources/OpenAICompatibleProvider) for authoritative ModelId helpers and supported feature sets.

By standardizing provider integrations behind the Swift AI SDK interfaces, you can experiment with multiple vendors, fail over between services, or mix models in the same application without rewriting business logic.
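Failing over between services can be sketched as trying candidates in order until one succeeds. The example below is a simplified, synchronous illustration under assumed names: `NamedModel`, `AllProvidersFailed`, and `completeWithFailover` are hypothetical and not part of the Swift AI SDK, and real provider calls would typically be asynchronous.

```swift
// Hypothetical wrapper pairing a model identifier with a completion closure.
struct NamedModel {
    let modelId: String
    let complete: (String) throws -> String
}

// Thrown when every candidate fails; records which models were tried.
struct AllProvidersFailed: Error {
    let attempted: [String]
}

// Tries each candidate in order and returns the first successful result.
func completeWithFailover(
    prompt: String,
    candidates: [NamedModel]
) throws -> (modelId: String, text: String) {
    var attempted: [String] = []
    for candidate in candidates {
        do {
            let text = try candidate.complete(prompt)
            return (candidate.modelId, text)
        } catch {
            // Remember the failure and move on to the next provider.
            attempted.append(candidate.modelId)
        }
    }
    throw AllProvidersFailed(attempted: attempted)
}
```

Because every candidate exposes the same call shape, the failover loop needs no vendor-specific branches. A production version would likely distinguish retryable errors (timeouts, rate limits) from permanent ones (invalid request) before moving on.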